(This post is part 1 of a series trying to understand the evolution of the web and where it could be heading)

For most people in tech, blockchain/crypto/NFTs/Web 3.0 have been an annoying buzz in the background for the last few years.

The whole idea of spending a couple of hours to (probably) perform an atomic commit into a database while burning a couple million trees seemed quite stupid to start with. Even stupider when you realize it’s not even a commit, but a hash of hashes of hashes of hashes confirming an insertion into a database somewhere.
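For the curious, the “hash of hashes of hashes” is, in Bitcoin’s case, a Merkle tree: every transaction is hashed, the hashes are hashed in pairs, and so on until a single root hash ends up in the block header. The sketch below is a simplified illustration, assuming Bitcoin-style double SHA-256 and its rule of duplicating the last hash on odd-sized levels, and ignoring the byte-order details of the real format; the transaction strings are made up.

```python
import hashlib


def sha256d(data: bytes) -> bytes:
    """Double SHA-256: hash the data, then hash the resulting digest again."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def merkle_root(transactions: list[bytes]) -> bytes:
    """Fold a list of transactions into one 32-byte root (a hash of hashes of hashes...)."""
    if not transactions:
        raise ValueError("need at least one transaction")
    level = [sha256d(tx) for tx in transactions]  # leaves: one hash per transaction
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # odd count: pair the last hash with itself
        # Hash each adjacent pair of concatenated hashes to build the next level up.
        level = [sha256d(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


txs = [b"alice->bob:1", b"bob->carol:2", b"carol->dave:3"]
root = merkle_root(txs)
print(root.hex())
```

Changing any single transaction changes the root, which is what lets a block commit to all of its transactions with one 32-byte value.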

Financially speaking, the only business model of the giants of this new web seemed to be either slapping that database on anything it could be slapped on and expecting immediate adoption, or a straight-out Ponzi scheme (get it now, it’s going to the moon, tomorrow would be too late!!1!1!).

Oh, and the cherry on top is that the whole hype machine around Web 3.0 blissfully ignored the fact that the term Web 3.0 had already been popularized, and by none other than Tim Berners-Lee more than a decade ago, to refer to the semantic web.

Now that the whole house of cards is crumbling down, the sheer stupidity of crypto Web 3.0 seems self-evident.

And yet, VCs who should have known better poured tens of billions into it, and developers with stellar reputations jumped on the hype train too. People who were around for Web 1.0, the .com bust, and Web 2.0, and who actually funded and built the giants of each era, seemingly drank the Kool-Aid.

Why?

Abridged opinionated history of the InterWebs

Web 1.0

The initial World Wide Web was all about hyperlinks. You logged into your always-on machine, went to the www folder of your web server, and wrote about something you cared about, hyperlinking to other people’s writing on the topic, and at the end you hit “save”. If your writing was interesting and someone found it, they would read it. If your references were well-curated, readers could dive deep into the subject and in turn hyperlink to your writing and to the sources you cited.

If that sounds academic, it is because it is. Tim Berners-Lee invented Web 1.0 while he was working at CERN as a researcher. It’s an amazing system for researchers, engineers, students, or in general any community of people looking to aggregate and cross-reference knowledge.

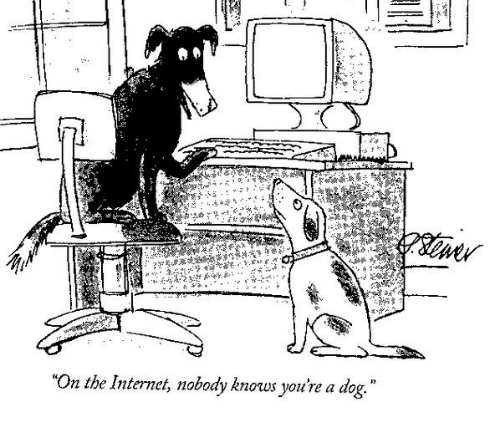

Where it starts to fall apart is when you start having trolls, hackers, or otherwise misbehaving users (hello.jpg).

However, what really broke Web 1.0 was when it got popular enough to hit Main Street and businesses tried to use it. It made all the sense in the world for selling products that were too niche for a physical store, such as rare books (hello, early Amazon). However, it was an absolute pain for consumers to find businesses on the internet and evaluate their reputation.

Basically, as a customer looking for a business, your best bet was to type what you wanted into the address bar followed by .com (e.g. VacationRentals.com) and hope you would get what you wanted, or at least a hyperlink to it. As a business looking for customers, your best bet in turn was to acquire the URLs people were most likely to type when looking for the products you were selling.

Aaaand that’s how we got the .com bubble. The VacationRentals.com domain mentioned above sold for $35M at the time, despite the absence of any business plan beyond the certainty that someone would come along with a business plan and be willing to pay even more, just to get the discoverability. After all, good .com domains were in limited supply and would certainly only get more expensive, right?

That definitely doesn’t sound like anything familiar…

Google single-handedly nuked that magnificent business plan.

While competing search engines (e.g. AltaVista) struggled to parse natural-language queries, to figure out the best responses to them, or to keep up with the rapidly evolving Web, Google’s combination of PageRank, to gauge a site’s reputability, and bag-of-words matching, to find the pages the user was looking for, Just Worked(TM). As a customer, instead of typing a URL into the address bar, you could just google it, and almost always one of the first three results would be what you were looking for, no matter how insane the URL (e.g. AirBnB.com). Oh, and on top of that, the polish of their algorithms meant you could trust your results to be free of malware, trolls, or accidental porn (thanks to the company-wide porn-squashing Fridays at Google).
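The PageRank + bag-of-words combination can be sketched in a few lines. This is a toy under stated assumptions: the textbook PageRank formulation (power iteration, damping factor 0.85), a naive match where every query word must appear on the page, and a made-up four-page link graph. Google’s production ranking combined far more signals.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Power-iteration PageRank: a page is important if important pages link to it."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page gets a baseline share from the "random jump" term.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank evenly across its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                for p in pages:
                    new_rank[p] += damping * rank[page] / n
        rank = new_rank
    return rank


def search(query: str, docs: dict[str, str], rank: dict[str, float]) -> list[str]:
    """Bag-of-words match (every query word must appear), ordered by PageRank."""
    words = set(query.lower().split())
    hits = [page for page, text in docs.items() if words <= set(text.lower().split())]
    return sorted(hits, key=lambda page: rank[page], reverse=True)


# Hypothetical link graph: page -> pages it links to.
links = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
docs = {
    "a": "cheap vacation rentals",
    "b": "vacation rentals by owner",
    "c": "vacation rentals and flights",
    "d": "vacation rentals scam",
}
rank = pagerank(links)
print(search("vacation rentals", docs, rank))
```

Every page here matches the query, so the order comes entirely from the link graph: c is linked to by three of the other pages and ends up on top, while d, which nobody links to, comes last.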

As a business, your amazing .com domain was suddenly all but worthless, and what really mattered was the top spot in Google search results. That shift in where discoverability budgets went led to the .com bubble bust, and to Google’s AdWords becoming synonymous with marketing on the internet. If you ever wondered how Google got a quasi-monopoly on online ads, now you know.

While Google had solved the issue of finding businesses (and, incidentally, knowledge) online, the problem of trust remained. Even if you got customers to land on your website, it was far from guaranteed that they would trust you enough to share their credit card number, or to wire money to an account they had never seen before.

The Web of Trusted Platforms emerged around and in parallel with Google, but it really became mainstream once Google made the products on those platforms easier to discover. Amazon with its customer reviews, eBay, PayPal, …: they all provided the assurance that your money wouldn’t get stolen, and that if the product didn’t arrive or wasn’t as advertised, they would take care of returns and reimbursement.

At this point, the two big issues with Web 1.0 were solved, but something was still missing. For most people, what matters is the interaction with other people, and since Web 1.0 was still built for knowledge and businesses, that part was missing. It wasn’t only missing for users; it was also a business opportunity, given that word-of-mouth recommendations are trusted more than those from an authority, no matter how reputable, and that users reveal much more about their interests when talking to friends than through their searches.

Web 2.0

If Web 1.0 was about connecting and finding information, Web 2.0 was all about building walled gardens providing a “meeting space” while collecting the chatter and allowing businesses to slide into the chatter relevant to them.

While a few businesses had the idea before it (MySpace), it was really Facebook that hit the nail on the head and attracted enough of the right demographic to hit the jackpot with brands and companies wanting access to it. Perhaps part of its viral success came from starting with the two most socially anxious and least self-censoring communities: college and high-school students. Add the ability to post pictures of the parties and tag people in them, an instant chat that was miles ahead of the competition when it came to reliability (cough MSN Messenger cough), sprinkle some machine learning on top, and you had hundreds of millions of pairs of attentive, easy-to-influence eyeballs, ready to be sold to advertisers with little to no concern for users’ privacy or feelings. (Remember the ads for dating websites on FB when you changed your status to “it’s complicated”? Or the ads for engagement rings when you changed it to “in a relationship”?)

However, the very nature of Facebook also burnt out its users and made them leave for other platforms. You ain’t going to post your drunken evenings for your grandma to see. Nor will you want to keep in touch with that girl from high school who went full MLM. Or deal with the real-life drama that was moving onto that new platform: nightly posts from your alcoholic uncle about how much he hates his wife, your racist aunt commenting on your cousin’s group pictures that include a black guy, …

And at that point Facebook went from the cool hip kid to the do-it-all weirdo with a lot of cash who kept buying out newer, cooler platforms: Gowalla, Instagram, WhatsApp, Oculus VR, … At least until Snapchat turned down their offer and antitrust regulators started taking a closer look.

Specialized social media popped up wherever Facebook couldn’t go or hadn’t moved into yet. LinkedIn allowed people to connect for their corporate needs. Yelp, Google Maps, and Foursquare allowed people to connect over which places to go. Tumblr, Pinterest, 9gag, and Imgur allowed people to share images, from memes to pictures of cats and dream houses. Others, such as Reddit and Twitter, were just generally weird and yet engaging.

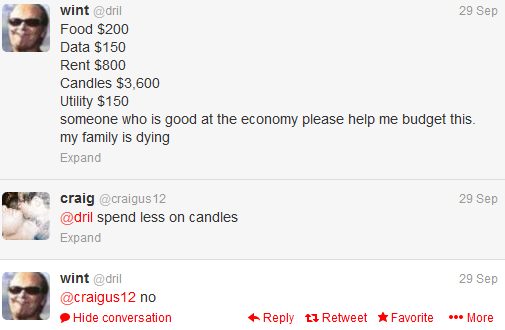

However, most of the social networks that came after Facebook had a massive problem: generating revenue. Or, more specifically, attracting businesses to advertise on them.

The main reason is that to attract enough advertisers, a network needs to prove it has enough reach and that its advertising model is good enough to drive conversions. And for that, as an advertiser, you want maximum reach and maximum information about the people your ads go to. So unless you go with Facebook or Google, you either need a really good understanding of how a specific social network works, or you go through ad exchanges.

In other words, you and your users are caught in a web of ads, and as a social network you are screwed both ways. Even if you are rather massive, there might not be enough advertisers to keep you afloat while keeping your service usable. Despite its size and notoriety, Twitter struggled to turn a consistent profit, even 16 years in. YouTube, despite being part of the Google ecosystem, is basically AdTube for most users. If you go through ad exchange networks, you get a tiny sliver of the ad revenue, not enough to differentiate yourself from others or to drive any development. Oh, and nothing guarantees that the ads assigned to you will be acceptable to your user base and not loaded with malware. Or, conversely, that the advertisers’ content won’t show up next to content they don’t want associated with their brand at all.

The problem was not only that this model had razor-thin margins, but also that it was increasingly threatened by rising awareness of the privacy issues surrounding the ad industry, and of how the information collected for ads could be used against users. Not only among reclusive German hackers worried about the NSA in the wake of Snowden’s revelations, but among anyone paying attention, especially after the Cambridge Analytica scandal.

The Web of Taxed Donations (or world of microtransactions) was the next attempt to break out of it.

A lot of social media companies noticed through their internal analytics that not all users are equal. Some attract much more attention and amass a fandom (PewDiePie). Some of those fans are ready to pay good money to get the attention of their idol (Bathwater). Why keep the middleman of ads the fans don’t want to see anyway, rather than let them make direct transfers and tax those? Especially if you are already giving creators a kickback from ad revenue (because if you aren’t, they’ll leave and take their followers with them)?

Basically, it’s like Uber, but for people already doing creative work in cyberspace (content creators). Or, for people more familiar with gaming, microtransactions. Except instead of a questionable hat on top of your avatar, you get a favor from your favorite star. Or the removal of an annoyance standing between you and them, such as an ad. Or a real-world perk, such as a certificate or a diploma.

EdX started the move by creating a platform to match providers and consumers of paid online classes and degrees. YouTube followed by adding Premium and Super Chats, Twitch added subscriptions and cheers, and Patreon rolled in to provide a general-purpose way to make direct donations. However, it was perhaps OnlyFans, which abandoned ads completely in favor of direct transactions, that really drove awareness of that income model (and accelerated Twitter’s downfall), while Substack did the same for high-quality writing.

The nice thing about that latter model is that it generated money. Like A LOT of money.

The less nice thing is that you have to convince creators to come to your platform while competing with platforms offering a better revenue share, and then you need to convince people to sign up or purchase tokens on your platform. All while competing with sources funded by ad revenue, and with the money people spend on housing, food, transportation, …

Basically, as of now, Web 2.0 is built around convincing users to generate value on your platform and then taxing it, be it by having them watch ad spots you can sell to businesses, or by having them load their hard-earned cash directly onto your platform to remove annoyances, show they are cooler, or donate to creators.

Now, if we see the web as a purely transactional environment and strip it of everything else, you basically get a “bank” with “fees”. Or a “distributed ledger” with “gas fees”.

Crypto Web 3.0

And that’s the promise of Crypto Web 3.0. The “big idea” is to make any internet transaction a monetary one. A microtransaction. Oh, you want to access a web page? Sure, after you pay a “gas fee” to its owner. You want to watch a video? Sure, after you transfer a “platform gas fee” for us to host it and a “copyright gas fee” to its creator.

Using a cryptographic PoW-based blockchain for transactions when the “creators” were providing highly illegal things was far from stupid. You can’t have a bank account linked to your identity in case someone rats you out, or the bank realizes what you are using the money for. Remember Silk Road? Waiting a couple of hours for the transaction to clear, and paying a fee on it that would make MasterCard, Visa and PayPal salivate, was an acceptable price for getting drugs delivered to your doorstep a couple of days later. Or for getting a password to a cache of 0-day exploits.
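Why does clearing take so long and burn so much power? Proof of work is deliberate brute force: miners keep hashing a candidate block with different nonces until the hash falls below a target. A toy sketch follows, assuming plain SHA-256 over arbitrary bytes with an appended 8-byte nonce; Bitcoin’s real scheme hashes an 80-byte header with double SHA-256 and encodes the target differently, and the block contents here are made up.

```python
import hashlib
from itertools import count


def pow_hash(block_data: bytes, nonce: int) -> int:
    """SHA-256 of the block data with an 8-byte nonce appended, as an integer."""
    digest = hashlib.sha256(block_data + nonce.to_bytes(8, "big")).digest()
    return int.from_bytes(digest, "big")


def mine(block_data: bytes, difficulty_bits: int) -> int:
    """Brute-force the smallest nonce whose hash has `difficulty_bits` leading zero bits.

    Each attempt succeeds with probability 2**-difficulty_bits, so the expected
    number of hashes doubles with every extra bit of difficulty.
    """
    target = 1 << (256 - difficulty_bits)
    for nonce in count():  # count() is endless; we return on the first success
        if pow_hash(block_data, nonce) < target:
            return nonce


def verify(block_data: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Checking a solution costs a single hash, however long mining took."""
    return pow_hash(block_data, nonce) < (1 << (256 - difficulty_bits))


nonce = mine(b"alice->bob:1 BTC", difficulty_bits=16)
print(nonce, verify(b"alice->bob:1 BTC", nonce, 16))
```

The asymmetry is the whole point: finding a nonce takes about 2^difficulty_bits hashes on average, while checking it takes one. Real networks tune difficulty so that all miners combined find a block only every so often (about every ten minutes for Bitcoin), which is where both the waiting and the electricity go.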

However, even in this configuration, the crypto had a weak point – exchanges. Hackers providing a cache of exploits have to eat and pay rent and electricity bills. Drug dealers have to pay the supplier, but also eat and live somewhere.

So you end up with exchanges: places you trust to sell you bitcoins for real cash and, when you want to cash out, to exchange them back into real money. Basically, banks. But with none of the safety a bank comes with. No way to recover stolen funds, no way to discover the identity of the people who stole them, no way to call Interpol on them, no insurance on the deposits, nothing.

You basically trust your exchange not to be the next Mt. Gox (good luck with that) and you accept it as the price of doing shady business. It’s questionable, and probably a dangerous convenience, but it is not entirely dumb.

What is entirely dumb, however, is trying to push that model mainstream. Those hours to clear a transaction? Forget about it: people expect to pay for their coffee and bagel to go in seconds. Irreversible transactions? Forget it: people send funds to the wrong address and get scammed all the time, so they need a way to contest charges and reverse them. Paying $35 in transaction fees to order a $15 pizza? You better be kidding, right? Remembering seed phrases, tracking cold wallet compatibility, and typing 40-character hexadecimal wallet addresses? Forget it: people use 123456! as a password for a reason and can’t even type an unfamiliar name correctly. Losing their deposited money? Now that’s absolutely out of the question, especially for any remotely serious account.

So basically you end up with “reputable” major corporations that do de facto centralized banking and promise that in the background they do blockchain. At least once a day. Maybe. Maybe not; it’s not like there are any regulations to punish them if they don’t. Nor do they come with any of the guarantees of standard banks: after all, transactions are irreversible, fraud and “hacks” happen, and it’s not like deposits are covered by the FDIC’s $250k insurance. Oh, and they are now unsuited for any illegal activity, because regulators can totally find, reach and nuke them (cf. the US sanctions on Tornado Cash, or exchanges restricting Russian users after the start of the war in Ukraine).

Oh, and on top of all of that, every small transaction still burns about a million trees’ worth of CO2 emissions.

Pretty dumb.

But then it got dumber.

The NFTs.

Heralded as the poster child for everything good Web 3.0 was going to bring, they were little more than URL pointers to JPEG images that anyone could view, conferring no enforceable ownership rights. Just an association of a URL with a wallet, saved somewhere on a blockchain. Not like it was benefiting the artist either: the money basically goes to whoever mints them and puts them up for auction, artist rights be damned.

And yet somehow it worked. At the peak of the hype, NFTs were selling for tens of millions of dollars. Not even two years later, it’s hundreds of USD, at best. Not very different from Beanie Babies. But the people buying them probably weren’t around for the Beanie Babies craze (made possible by eBay, btw).

And VCs in all of that?

Silicon Valley invests in a lot of stupid things. It’s part of the VC mentality over there, and I don’t mean that in a bad way. They start from the premise that great ideas look very stupid at first, right until they don’t. Very few people would have invested in a startup run by a 20-year-old nerd as a tool for creepy college bros to figure out which of their targets was single, or had just become single, or was feeling vulnerable and ripe to be approached. And yet when Facebook started gaining traction on campuses, VCs dumped a bunch of money into it, creating the second-biggest walled garden of Web 2.0 (and enabling state-sponsored APTs to figure out ripe targets for influence operations).

However, Silicon Valley VCs are also aware that a lot of ideas that look very stupid at first are actually just pretty stupid in the end. That is why they invest a little often and a lot rarely, expecting 95-99% of their portfolio to flop.

And yet, despite the shitshow FTX was, it still got $1B from VCs (if I am to trust Crunchbase, at a $32B valuation).

Perhaps it’s Wall Street golden boys turned VCs? Having fled investment banking after the 2008 collapse, they had the money to find a new home in Silicon Valley, make money with fintech and move on to being VCs themselves?

That would make sense: after seeing crypto hit Main Street during its 2017 peak, and seeing no regulations put in place after it all crashed, the temptation to pull every trick in the book that the SEC had made illegal in traditional finance would be pretty high. With households looking for investment vehicles for all the cash they amassed during lockdowns, crypto was easy to pitch while it was being pumped, at least as long as it could be made to look like it was going to the moon.

There is some credibility to that theory: massive crypto dumps were synchronized to the day with the Fed’s rate hikes, in a way highly suggestive of someone with a direct borrowing line to the Fed to get leverage.

And yet that can’t be all of it. The oldest and most reputable Silicon Valley VCs were on board the crypto hype train, either raising billions to form Web 3.0 investment funds or recommending one coin over others.

But why?

I can’t get inside the heads of investors with billions at their fingertips, but my best guess is that they saw the emerging web of taxed transactions as a Web 3.0 ready to go, with its first giant poised to displace major established markets, in the same way Google did to .com domains with its search.

Under the assumption that blockchain was indeed going to be the backbone of that transactional web, and given the need for reputable actors to build a bridge to real cash, it made sense that no price was too high to get on board the next monopoly, one big enough to disrupt any major player on the market, be it ad-dependent or dependent on direct donations.

But given the limitations of blockchain, that latter assumption seemed… a bold bet, to say the least. So maybe they also saw a .com bubble 2.0 coming and decided to ride it the proper way this time around.

The curious case of hackers and makers

While it makes sense that VCs wanted a crypto Web 3.0, it also attracted a number of genuinely notable makers: developers and hackers whose reputations were already made. For instance, Moxie Marlinspike of Signal fame at some point considered adding blockchain capability to it. While he and a lot of other creators later bailed out, pointing out the stupidity of Web 3.0, for a while their presence lent the whole of Web 3.0 credibility. Credibility crypto fans still keep clinging to.

But why?

Why would makers be interested in a transactional web? Why would they give any thought to using blockchain, despite having all the background to understand what it was? They are here to build cool things, even if it means that little to no revenue gets to them, with the Linux kernel being the poster child for that whole stance.

Well.

The issue is that most makers and creators eventually realize that you can’t live on exposure alone, and once a bunch of people start using your cool new project, you need to find money to pay yourself to work on it full time, to pay the people helping you, and eventually to pay for the servers running it, if it’s not self-hosted.

And the web of untaxed donations (or web of gifting) will only take you so far.

Wikipedia manages to raise what it needs to function each year from small donations. But that’s Wikipedia. And Jimmy Wales has to beg people for donations by mail 2-3 times a year.

Signal mostly manages to survive off donations, but on several occasions the inflow of new users overwhelmed the servers before the volume of donations caught up with demand, with the biggest crunches coming right when people needed the service the most, such as at the start of the Russian invasion of Ukraine early this year.

Mastodon experienced it firsthand this year, as wave after wave of Twitter refugees brought most instances to their knees, even though this exact scenario was the original reason for Mastodon’s existence.

That scaling failure is kinda what happened to Twitter too. After Musk’s takeover and purges, Jack Dorsey went on record saying that his biggest regret was turning Twitter into a company. He is not wrong. His cool side project outgrew his initial plan without ever generating enough revenue to fund the lawyers it needed when it decided to protect activists on the platform during the 2011 Arab Spring, then the moderation teams in the wake of the social platform manipulation around the 2016 US presidential election, the content moderation against COVID-19 disinformation in 2020-2022, or the sexual content moderation in late 2021 and 2022. A non-corporate Twitter would never have had the funds to cover all those expenses. (And a lot of corporations even try to hide the issue by banning any research on their platforms.)

In a way, it kinda makes sense that he is now trying to build a new social network, but this time powered by crypto, in Web 3.0.

Not that donations don’t work to fund free-as-in-freedom projects. It’s just that so much more could be funded, and so much more reliably, if there were a better mechanism for a kickback to fund exciting projects. One that might have saved projects from shutting down or going corporate even though none of the developers, maintainers, or users really wanted it.

From that point of view, it also makes sense for the underlying payment system to be distributed and resilient to censorship. Signal is fighting off censorship and law enforcement, and going after its payment processor is one of the fastest ways to take it down.

So it kinda makes sense, and yet…

Into the web of gifting with resilient coordination?

Despite the existence of Web 3.0 alternatives, when Twitter came crashing down, people didn’t flock to them, they flocked to Mastodon, caring little about its bulky early 2010s interface, intermittent crashes, and rate limits as servers struggled to absorb millions after millions after millions of new arrivals.

In the same way, despite not having the backing of a major publisher or professional writers, Wikipedia not only outlasted Encyclopedia Britannica, it became synonymous with current, up-to-date and reliable knowledge. Arguably, Wikipedia was even a Web 2.0 organization way before Web 2.0 was a thing, despite being unapologetically Web 1.0 and non-commercial. After all, it just set the rules for nerds to have passionate debates about who was right while throwing citations and arguments at each other, with the reward being to have their words chiseled in for the world to see and refer to.

And for both of them, it was the frail web of gifting that choked the giants in the end, in the same way Firefox prevailed over Internet Explorer, Linux over Unix, Python over Matlab, and R over SAS.

Perhaps that’s because the most valuable part of the web of giving is not the monetary gifts but the gift of time and knowledge from developers.

Signal could never have survived waiting a decade for widespread adoption if it had had to pay salaries to the caliber of developers who contributed to and supported it. Wikipedia could never have paid salaries to all its contributors, moderators and admins for all the volunteer work they did. Linux is sponsored by a number of companies to add features and security patches, but the show is still run by Linus Torvalds and the developers’ mailing list. Same for Python and R.

It’s not only volunteer work that’s a non-monetary gift. Until not too long ago, people were donating their own resources to keep projects online without a single central server: torrenting and peer-to-peer. Despite the public turn towards centralized solutions, driven by regulation and ease of use, it’s still the mechanism Microsoft uses to distribute Windows updates and the mechanism PeerTube runs on.

Perhaps more interestingly, we now have pretty good algorithms for building large Byzantine-fault-tolerant systems, meaning that a lot of the trust shortcomings of peer-to-peer could now be addressed.
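For a sense of what “Byzantine-resilient” means in numbers: classic Byzantine-fault-tolerant protocols such as PBFT assume a known set of n participants, at most f of which may behave arbitrarily (lie, collude, crash), and require n ≥ 3f + 1 with quorums of q = 2f + 1. The arithmetic below is a sketch of that textbook quorum argument, not of any specific system this post has in mind. It shows why any two quorums always overlap in more than f nodes, so at least one node in the overlap is honest, with no proof-of-work involved:

$$
n = 3f + 1, \qquad q = 2f + 1
$$

$$
|Q_1 \cap Q_2| \;\ge\; |Q_1| + |Q_2| - n \;=\; 2(2f + 1) - (3f + 1) \;=\; f + 1 \;>\; f
$$

For example, with f = 1 you need n = 4 nodes and quorums of 3, and any two quorums share at least 2 nodes, at most one of which can be faulty.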

It’s just that blockchain ain’t it.

We might get a decentralized Web 3.0.

It just won’t be a crypto one.