Among the most frustrating languages I’ve encountered so far, Mathematica definitely ranks pretty high. R, the master troll of statistical languages, pales in comparison. At the moment of writing this post I’ve just spent two hours trying to wrap a function that I managed to make work in the main namespace into a Module that I could call with given parameters. Not that I am a beginner programmer, or that I am unfamiliar with LISP, symbolic languages or meta-programming. Quite the opposite. Despite its awesome potential and regular media attention, Mathematica is an incredibly hard language to program in properly, no matter what your background is.

Impossible functionalization.

So I’ve just spent two hours trying to rewrite three lines of code that I was already using in a stand-alone notebook. In theory (according to Mathematica), it should be pretty simple: define a “Module[{variables}, operations]”, replace operations with the commands from my notebook that I would like to encapsulate, and replace variables with the variables I would like to be able to change in order to modify the behavior of my code.

The problem is that it never worked. And no matter how deep I went into the documentation of Module[.., ..] and of the individual commands, I could not figure out why.
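For reference, here is a minimal sketch of the wrapping pattern as I understand it from the documentation. The function name `smoothAndPeak` and its arguments are made up for illustration and are not the code from my notebook. The assumption worth stating is that the list passed to Module declares local variables only, while the parameters you want to change come from the pattern on the left-hand side of the definition.

```wolfram
(* Hypothetical example: a few notebook lines wrapped into a reusable function. *)
(* smoothAndPeak, data and window are illustrative names only. *)
smoothAndPeak[data_List, window_Integer] := Module[{smoothed, peak},
  smoothed = MovingAverage[data, window];  (* local to this call *)
  peak = Max[smoothed];                    (* local to this call *)
  {smoothed, peak}                         (* last expression is the return value *)
]

smoothAndPeak[{1, 2, 3, 4, 5}, 2]
```

Note that a trailing semicolon after the last expression would make the Module return Null instead of the result, which is one easy way to get a function that silently returns nothing.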

You have an error somewhere, but I won’t tell where

This is one of the main sources of frustration and failure when debugging. Mathematica returns errors WITHOUT A STACK TRACE, which means that the only things you get are the name of the error and a link to the official documentation, which explains where the error might come from in very general terms (20 lines or less).

The problem is that since your error most likely won’t occur until the execution stack hits the internals of other functions, by the time your error is raised and returned to you, you have no freaking idea of:

a) Where the error was raised

b) What arguments raised it

c) What you need to do to get to the desired behavior

And since the API/implementation of individual functions is nowhere to be found, your best chance is to start randomly changing your code until it works. Or to go and google different combinations of your code and/or errors, hoping that someone has already run into a similar error in similar conditions and found out how to correct it.

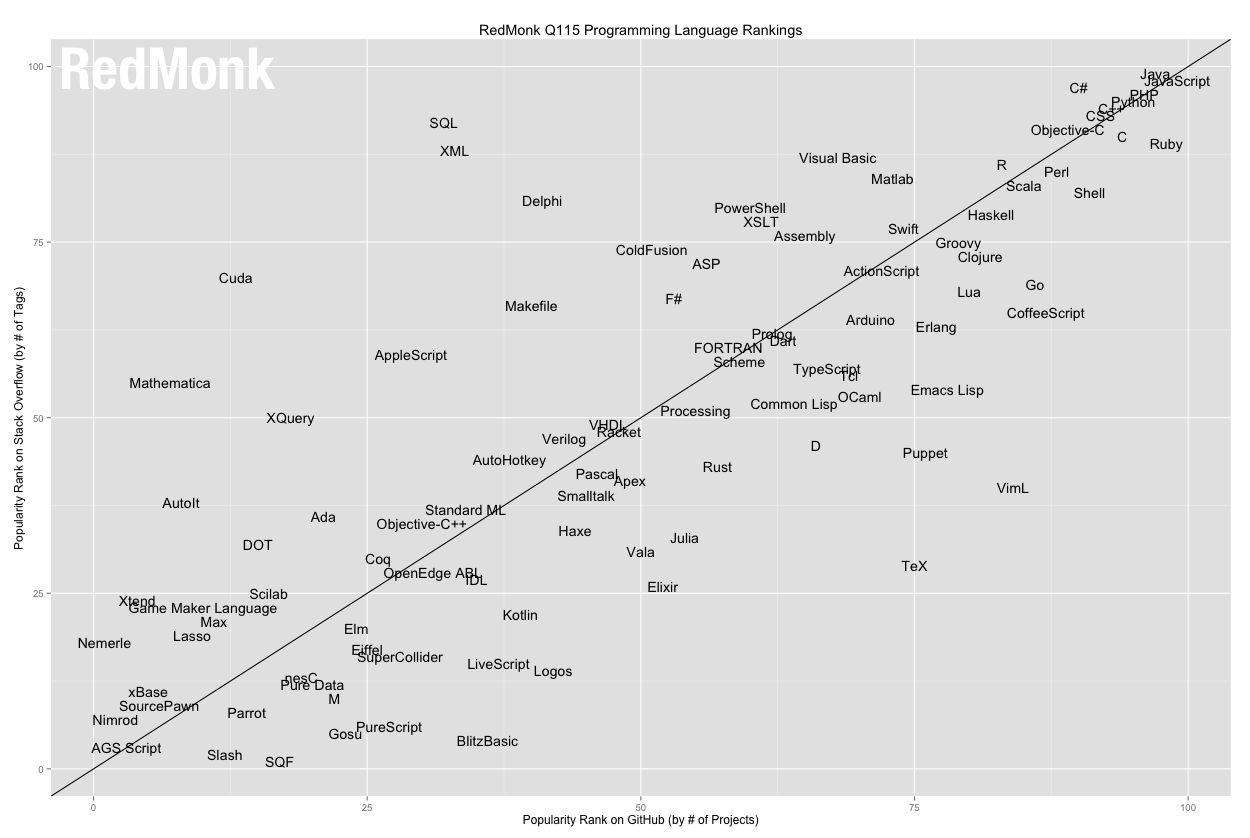

Which actually blows the ratio of questions asked about the Wolfram Language completely out of proportion to the output it provides.

Yup. The only programming language to have its own, separate and very active Stack Exchange site, and yet REALLY, REALLY inferior compared to MATLAB and R, its closest domain-specific cousins. Actually, with regard to the output it provides, it is buried among the languages you’ve probably never heard of.

You might have an error, but I won’t tell you

In addition to returning stackless errors, Mathematica is a fail-late language, which means it will try to silently convert and transform the data to force it through the function until it fails. Each of these two error management techniques is already pretty nasty on its own and has been cleaned out of most commonly used languages, so their combination is pretty disastrous.
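A small sketch of what fail-late looks like in practice. The function `square` and the message name `badarg` are hypothetical names chosen for this illustration. The first half shows a call that matches no definition being returned unevaluated without any message. The second half shows one possible way to make the same call fail early with an explicit message.

```wolfram
(* Hypothetical function: only defined for integers. *)
square[x_Integer] := x^2

square[3]         (* matches the pattern and evaluates to 9 *)
square["three"]   (* no rule matches: returned unevaluated, with no message *)

(* The unevaluated expression then travels silently into later code. *)
result = 1 + square["three"]   (* stays symbolic: 1 + square["three"] *)

(* One way to fail early: a catch-all rule that emits a message. *)
square::badarg = "Expected an integer, got `1`.";
square[other___] := (Message[square::badarg, {other}]; $Failed)

square["three"]   (* now issues the square::badarg message and returns $Failed *)
```

The catch-all definition has to be written by hand for every function you want to protect, which is part of the problem.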

However, Mathematica does not stop there in making error detection a challenge. Mathematica has several underlying basic operation models, such as rewriting, substitution and evaluation, which correspond to the same concepts but do very different things to exactly the same data. And they are arbitrarily mixed and NEVER EXPLICITLY MENTIONED IN THE DOCUMENTATION.

Having multiple basic operations is what makes this language powerful and suited for abstraction and mathematical computation. But since they are arbitrarily mixed without being properly documented, the number of errors they generate and the amount of debugging they require is pretty insane, and largely offsets the comfort they provide.
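To make the distinction concrete, here is a rough sketch of how these operation models differ on very similar input. The symbols `x`, `y`, `a`, `b` and `f` are arbitrary placeholders assumed to have no prior definitions in the session.

```wolfram
x = 2;                 (* assignment: stores a value for x *)
x + y                  (* evaluation uses the stored value: 2 + y *)
Hold[x + y]            (* Hold blocks evaluation: stays Hold[x + y] *)

a + b /. a -> 2        (* substitution: a one-off rule gives 2 + b; a stays unassigned *)

(* Repeated rewriting: apply the rule until the expression stops changing. *)
f[0] //. ((f[n_] /; n < 3) :> f[n + 1])   (* f[0] -> f[1] -> f[2] -> f[3] *)
```

Nothing in the notation of a given function call tells you which of these models it uses internally on its arguments, which is why the mix is so hard to debug.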

No undo or version control

Among the things that are almost as frustrating as the Mathematica errors is the execution model of the Wolfram Language. Mathematica notebooks (and hence the code you are writing) are first-class objects: objects that the language itself can reason about and that might get modified extensively upon execution. Which is an awesome idea.

What is much less awesome is the implementation of that idea. In particular, the fact that the notebook can get modified extensively upon execution means that reconstructing what the code looked like before the previous operation might be impossible. So Mathematica discards the whole notion of code tracking.

Yes, you read it right.

Any edits to code are permanent. There is also absolutely no integration with version control, which turns an occasional fat-finger delete-evaluate into a critical error that will make you lose hours of work. Unless you have 400 files to which you’ve “saved as” the notebook every five minutes.

You just don’t get it

All in all, this leaves a pretty consistent impression that the language designers had absolutely no consideration for the user, valuing the user’s work (code) much less than their own, and showing it in the complete absence of safeguards of any kind, of proper error tracking and of proper code modification tracking. All of which made their work of creating and maintaining the language much easier, at the expense of making the user’s work much, much harder.

A normal language would get over such an initial period of roughness and round itself out thanks to a base of contributors and a flow of feedback from users. However, Mathematica is a closed-source language, developed by a select few, who snub users’ input and, instead of improving the language based on it, persist in explaining to those trying to provide feedback how the users “just don’t get it”.

For sure, Mathematica has a lot of power to it. Unfortunately, this power remains and will remain inaccessible to the vast majority of commoners because of impossible syntax, naming conventions and a debugging experience straight from an era where just pointing to the line of code where the error occurred was waaay beyond the horizon of the possible.